Computing Systems: Difference between revisions

| Line 132: | Line 132: | ||

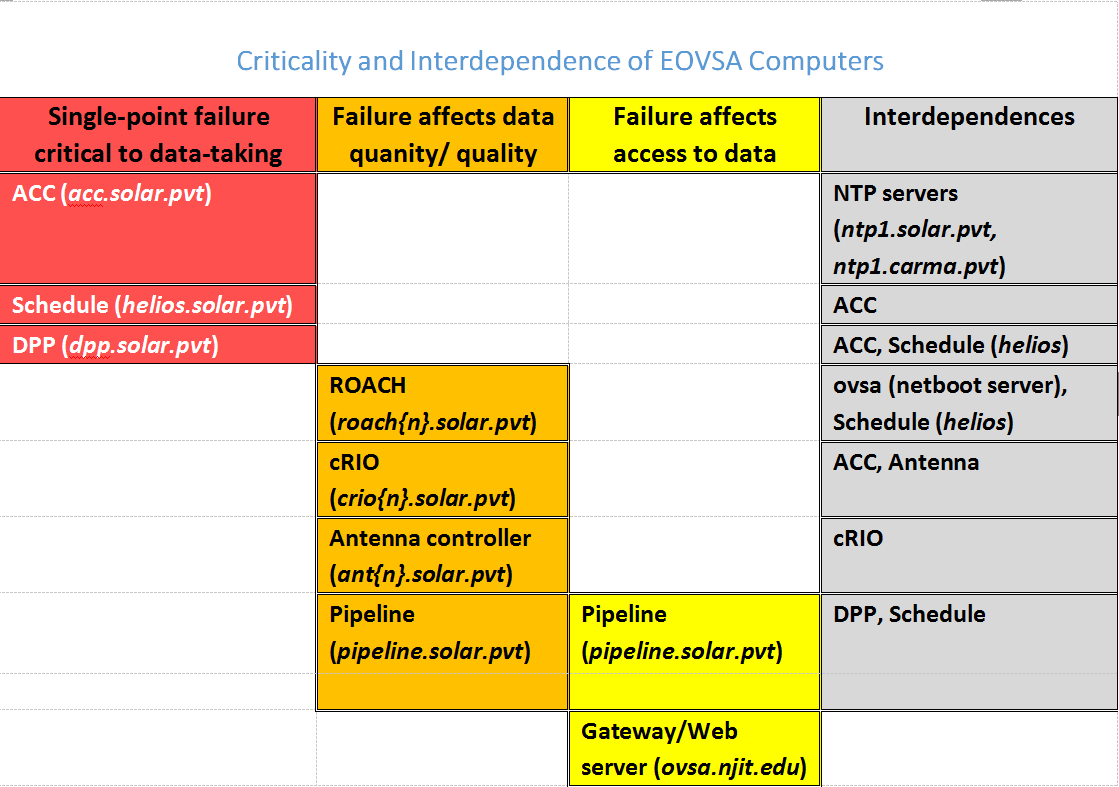

== Interdependencies of the EOVSA Computers == | == Interdependencies of the EOVSA Computers == | ||

In order for the various computers in the system to do their jobs effectively, there are certain interdependencies that are built in to the infrastructure. It is important to clarify these and make sure that they are as limited as possible in order to allow the system to function when non-critical infrastructure is down for maintenance or repair.</ | In order for the various computers in the system to do their jobs effectively, there are certain interdependencies that are built in to the infrastructure. It is important to clarify these and make sure that they are as limited as possible in order to allow the system to function when non-critical infrastructure is down for maintenance or repair.<br /> | ||

[[File | [[File:Figure 2.png|none]] | ||

The above table summarizes the interdependencies of the computers in the EOVSA system. The critical computers for control of the system are acc, helios and dpp. Basic control and data-taking are not possible without these three machines being operational. Still important, but not critical, computers (i.e. not single-point failures) are the cRIOs in the antennas, the antenna controllers themselves, and the ROACH boards. In general, the system should be capable of operating without one or more of these systems. Note that losing a ROACH board means that the two antennas input to that board are lost, and additionally all frequency channels (1/8th of each 500-MHz band) handled by that board’s X-engine are also lost. In terms of impact, then, an individual ROACH board is more critical than an individual cRIO or antenna controller. Next in importance is pipeline, which has a role not only in real-time data products and interim-to-archival processing, but also in certain near-real-time calibration processing. Although the interim database can be created without pipeline, the quality of the interim database may be compromised. Finally, tawa is off the critical path and should not generally affect any aspect of data-taking or data-processing. The gateway/web server, ovsa, could be critical in providing access to the private network from outside, although in case of ovsa failure tawa could be pressed into service by merely plugging it into the WAN and possibly making some changes in the DHCP/firewall settings at the site. It may be worthwhile to put into place preparations (and advance testing) to make this option as quick and easy as possible. | The above table summarizes the interdependencies of the computers in the EOVSA system. The critical computers for control of the system are acc, helios and dpp. Basic control and data-taking are not possible without these three machines being operational. Still important, but not critical, computers (i.e. 
not single-point failures) are the cRIOs in the antennas, the antenna controllers themselves, and the ROACH boards. In general, the system should be capable of operating without one or more of these systems. Note that losing a ROACH board means that the two antennas input to that board are lost, and additionally all frequency channels (1/8th of each 500-MHz band) handled by that board’s X-engine are also lost. In terms of impact, then, an individual ROACH board is more critical than an individual cRIO or antenna controller. Next in importance is pipeline, which has a role not only in real-time data products and interim-to-archival processing, but also in certain near-real-time calibration processing. Although the interim database can be created without pipeline, the quality of the interim database may be compromised. Finally, tawa is off the critical path and should not generally affect any aspect of data-taking or data-processing. The gateway/web server, ovsa, could be critical in providing access to the private network from outside, although in case of ovsa failure tawa could be pressed into service by merely plugging it into the WAN and possibly making some changes in the DHCP/firewall settings at the site. It may be worthwhile to put into place preparations (and advance testing) to make this option as quick and easy as possible.<br /> | ||

Certain disks should be accessible via nfs from multiple machines, but care should be taken with nfs to avoid loss of a non-critical machine causing problems with a critical one. Therefore, unless otherwise required, the cross-mounting should be limited to pipeline having r/o access to dpp, and tawa having r/o access to dpp and pipeline. | Certain disks should be accessible via nfs from multiple machines, but care should be taken with nfs to avoid loss of a non-critical machine causing problems with a critical one. Therefore, unless otherwise required, the cross-mounting should be limited to pipeline having r/o access to dpp, and tawa having r/o access to dpp and pipeline. | ||

Revision as of 04:04, 27 September 2016

Overview of EOVSA Computing Systems

The computing infrastructure for EOVSA has been developed largely according to the initial plan, which calls for multiple computers dedicated to specific tasks. This document describes the computing hardware specifications and capabilities, the role of each computer in the system, and the interdependencies among them. A schematic summary is shown in Figure 1.

Specifications of Each System

In this section we detail the system specifications, for reference purposes. The systems are listed alphabetically by name.

acc.solar.pvt (192.168.24.10)

The Array Control Computer (ACC) is a National Instruments (NI) PXIe-1071 4-slot chassis with three units installed: NI PXIe-8133 real-time controller, NI PXI-6682H timing module, and NI PXI-8431 RS485 module. The relevant features of acc are:

- Real-Time PharLap operating system running LabVIEW RT

- Quad-core i7-820QM, 1.73 GHz processor with 4 GB RAM

- High-bandwidth PXI express embedded controller

- Two 10/100/1000BASE-TX (gigabit) Ethernet ports: one is connected to the LAN, and the other is dedicated to the Hittite synthesizer.

- 4 hi-speed USB, GPIB, RS-232 serial, 120 GB HDD

- Rackmount 4U Chassis

dpp.solar.pvt (192.168.24.100)

The Data Packaging Processor (DPP) is a Silicon Mechanics Rackform nServ A331.v3 computer with 32 cores. The main features of dpp are:

- Two Opteron 6276 CPUs (2.3 GHz, 16-core, G34, 16 MB L3 Cache)

- 64 GB RAM, 1600 MHz

- Intel 82576 dual-port Gigabit Ethernet controller

- Two 2-TB Seagate Constellation SATA HDD

- Myricom 10G-PCIE2-8B-2S dual-port 10-Gb Ethernet controller with SFP+ interface

- Ubuntu 12.04 LTS (long-term support) 64-bit operating system

- Rackmount 1U Chassis

helios.solar.pvt (192.168.24.103)

The Scheduling and Fault System computer is a Dell Precision T3600 computer. The main features of helios are:

- Intel Xeon E5-1603 CPU (4-core, 2.8 GHz, 10 MB L3 Cache)

- 8 GB RAM, 1600 MHz

- Two 2-TB SATA HDD

- 512 MB NVidia Quadro NVS 310 dual-monitor graphics adapter

- Dell Ultrasharp U2312H 23-inch monitor

- 16X DVD+/-RW optical DVD drive

- Integrated gigabit Ethernet controller

- Ubuntu 12.04 LTS (long-term support) 64-bit operating system

- Free-standing Tower Chassis

monitor.solar.pvt

The RDBMS server is a Dell PowerEdge server. The main features of monitor are:

- Intel Xeon E5-2420 CPU (1.90 GHz, 6-core, 15 MB L3 Cache)

- 16 GB RAM, 1600 MHz

- One 1-TB SATA HDD (operating system)

- Eight 4-TB HDD in RAID ? configuration

- Integrated Broadcom 5270 quad-port Gigabit Ethernet controller

- DVD-ROM optical drive

- Windows 7 64-bit operating system

- SQL Server

- Rackmount 1U Chassis

ovsa.njit.edu (192.100.16.206)

The Gateway/Web Server is an older machine (ordered 1/12/2011). The DELL service tag number is FHDD8P1. The main features of ovsa are:

- Intel Xeon W3505 (2.53 GHz, dual-core, 8 MB L3 Cache)

- 4 GB RAM, 1333 MHz

- 250 GB SATA HDD (operating system) + 250 GB data disk + 500 GB archive disk

- 512 MB Nvidia Quadro FX580 dual-monitor graphics adapter

- Dell U2211H 21.5-inch monitor

- 16X DVD+/-RW optical DVD drive

- Broadcom NetXtreme 57xx Gigabit Ethernet controller

- Ubuntu 12.04 LTS (long-term support) 32-bit operating system

- Free-standing Tower Chassis

pipeline.solar.pvt (192.168.24.104)

The Pipeline Computer is a Silicon Mechanics Storform iServ R513.v4 computer with 20 cores. Pipeline is the most powerful of the EOVSA computers, and is meant to handle the real-time creation of data products. The main features of pipeline are:

- Two Intel Xeon E5-2660v2 CPUs (2.2 GHz, 10-core, 25 MB L3 Cache)

- 128 GB RAM, 1600 MHz

- Integrated dual-port Gigabit Ethernet controller

- LP PCIe 3.0 x16 SAS/SATA controller

- Twelve 4-TB Seagate Constellation SATA HDD configured as RAID 6 with hot spare (36 TB usable)

- Ubuntu 12.04 LTS (long-term support) Server Edition 64-bit operating system

- Rackmount 2U Chassis
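As a rough check on the usable RAID capacities quoted in this section, the arithmetic can be sketched as follows. This is only an illustration and not part of the EOVSA software: the function name usable_tb and its parameters are hypothetical, and it assumes the standard overheads (RAID 1 is a single mirrored set, RAID 5 gives up one disk to parity, RAID 6 gives up two) with hot spares excluded from the array.

```python
def usable_tb(level, n_disks, disk_tb, hot_spares=0):
    """Usable capacity in TB of a simple RAID array (illustrative sketch only)."""
    active = n_disks - hot_spares  # hot spares hold no data until a rebuild
    if level == 1:
        return disk_tb  # assumes one mirrored set: capacity of a single disk
    if level == 5:
        return (active - 1) * disk_tb  # one disk's worth of parity
    if level == 6:
        return (active - 2) * disk_tb  # two disks' worth of parity
    raise ValueError(f"RAID level {level} is not handled by this sketch")


# pipeline: twelve 4-TB disks, RAID 6, one hot spare
pipeline_tb = usable_tb(6, 12, 4, hot_spares=1)
print(pipeline_tb)
```

With the pipeline figures above (twelve 4-TB disks, RAID 6, one hot spare) this gives the quoted 36 TB.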

roach{n}.solar.pvt (192.168.24.12{n})

The eight ROACH2 boards each have a PowerPC CPU, with hostnames roach1.solar.pvt, roach2.solar.pvt, … roach8.solar.pvt. They receive their operating systems via NFS netboot from the Gateway/Web Server computer ovsa.njit.edu. The CPUs on the ROACH boards are mainly for interacting with the on-board FPGAs, which are programmed to run the correlator design.
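The naming pattern in the heading, roach{n}.solar.pvt at 192.168.24.12{n}, can be expanded programmatically, for example when looping over all boards in a script. The helper name roach_hosts below is hypothetical and is shown only as a sketch of the pattern:

```python
def roach_hosts(n_boards=8):
    """Return (hostname, IP address) pairs for roach1 .. roach<n_boards>."""
    return [
        (f"roach{n}.solar.pvt", f"192.168.24.12{n}")
        for n in range(1, n_boards + 1)
    ]


for host, ip in roach_hosts():
    print(host, ip)
```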

tawa.solar.pvt (192.168.24.105)

The Analysis Computer is essentially a clone of the DPP Computer, a Silicon Mechanics Rackform nServ A331.v4 computer with 32 cores. The main features of tawa are:

- Two Opteron 6276 CPUs (2.3 GHz, 16-core, G34, 16 MB L3 Cache)

- 64 GB RAM, 1600 MHz

- Intel 82576 dual-port Gigabit Ethernet controller

- Two 2-TB Seagate Constellation SATA HDD, in a RAID1 configuration.

- DVD+/-RW optical DVD drive

- Ubuntu 12.04 LTS (long-term support) Server Edition 64-bit operating system

- Rackmount 1U Chassis

sqlserver.solar.pvt (192.168.24.106)

The RDBMS computer is a Dell PowerEdge R520. It records the main monitor database, which can be queried using standard SQL queries. The computer was installed on 9/25/2014, and runs the Windows Server 2008 R2 Standard operating system. The main features of sqlserver are:

- Intel Xeon E5-2420 CPU (1.9 GHz, 6-core, 15 MB L3 Cache)

- 32 GB RAM, 1600 MHz

- Broadcom 5720 Quad-port Gigabit Ethernet controller

- Four 4-TB SAS Western Digital WD-4001FYYG HDD, in a RAID5 configuration (12 TB usable)

- iDRAC remote management card (IP address 192.168.24.108)

- Read-only optical DVD drive

- SQL Server 2012 Developer software

win1.solar.pvt (192.168.24.101)

The Windows PC is a clone of the Gateway/Web Server, purchased around the same time (ordered 5/19/2011), but runs the Windows 7 operating system, mainly for the purpose of running Windows-only utilities including monitoring the ACC and the antennas. The DELL service tag number is C303GQ1. The main features of win1 are:

- Intel Xeon W3530 (2.8 GHz, dual-core, 8 MB L3 Cache)

- 4 GB RAM, 1333 MHz

- Two 500 GB SATA HDD

- 512 MB Nvidia Quadro FX580 dual-monitor graphics adapter

- Dell U2211H 21.5-inch monitor

- 16X DVD+/-RW optical DVD drive

- Broadcom NetXtreme 57xx Gigabit Ethernet controller

- Windows 7, 32-bit operating system

- Free-standing Tower Chassis

Function/Purpose of Computers

In the cases of the acc, dpp, pipeline, and sqlserver, the system host name is suggestive of the main purpose of the computer, while helios and tawa are the names of mythological Sun gods. This section gives a somewhat detailed description of the function of each system.

ovsa.njit.edu

This is the web server and gateway computer, which is the only one that is on the Wide-Area-Network (WAN). It has no other function in the overall system except to permit outside users to connect to the private network (LAN) by tunneling. To gain access to machines on the private network, it is necessary to log in to ovsa via ssh, at the same time declaring a tunnel that specifically opens a relevant port through to the desired machine on the LAN. The protocol is to issue a command like:

ssh -L <local port>:<host>.solar.pvt:<desired port> <user>@ovsa.njit.edu

where <host> is the host name of the machine to tunnel to, on the solar.pvt LAN, <desired port> is the port you wish to reach, and <user> is the name of a user account on ovsa.njit.edu that you will use to log in and create the tunnel. The <local port> is the port you will connect to from your local machine. Once you have issued the above command and logged in, you must open a second connection to localhost:<local port>. As a concrete example, say I wish to log in to helios as user sched via ssh. I would issue the command

ssh -L 22:helios.solar.pvt:22 dgary@ovsa.njit.edu

where 22 is the usual ssh port. I would then (in a second window) issue the command

ssh sched@localhost

which defaults to port 22 since I am using ssh. Another example is to set up for a VNC connection to the dpp. For that, I would issue the command

ssh -L 5902:dpp.solar.pvt:5900 dgary@ovsa.njit.edu

and then open a VNC connection to localhost:2 (VNC adds 5900 to the display number, hence this would connect via port 5902). For users of the Windows operating system, I suggest the use of the excellent MobaXterm (http://mobaxterm.mobatek.net/), which allows such tunnels to be set up and saved, then executed as a one-click operation.
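For those who script their connections, the tunnel command and the VNC display-to-port rule described above can be assembled as in the sketch below. The helper names tunnel_command and vnc_port are hypothetical; the sketch only builds the command line and does not open a connection. On many systems binding a local port below 1024 (such as 22) requires administrator privileges, so a higher local port may be preferable in practice.

```python
GATEWAY = "ovsa.njit.edu"  # the only machine on the WAN


def tunnel_command(local_port, host, remote_port, user):
    """Build the ssh argument list for a tunnel through the gateway."""
    forward = f"{local_port}:{host}.solar.pvt:{remote_port}"
    return ["ssh", "-L", forward, f"{user}@{GATEWAY}"]


def vnc_port(display):
    """TCP port used by VNC display number `display` (5900 + display)."""
    return 5900 + display


# VNC to dpp through local display :2, as in the example above
cmd = tunnel_command(vnc_port(2), "dpp", 5900, "dgary")
print(" ".join(cmd))
```

The resulting list can be passed to subprocess.run, or joined and pasted into a terminal.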

acc.solar.pvt

As its name implies, this is the Array Control Computer (ACC), which runs the supervisory LabVIEW code to communicate with the cRIO computer systems in each antenna, and to assemble and serve the 1-s stateframe. It provides a dedicated TCP/IP port (6341) from which each subsystem can connect and read stateframes of various “age” from the history buffer, from 0-9 seconds old. Stateframe 0 is the incomplete one being filled for the current second. It also supplies ports for the ACC to receive stateframe information from the schedule (port 6340) and the DPP (port 6344) for adding to the stateframe. In addition to talking to the cRIOs, it also controls the Hittite LO system, the subarray switches in the LO Distribution Module (LODM), and the downconverter modules (DCMs).
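The history buffer described above can be pictured with the toy model below. It is an assumption-laden sketch, not the LabVIEW implementation: the class StateframeHistory, its methods, and the dictionary-based frames are all hypothetical, and only the port numbers and the 0-9 second age range come from the text.

```python
from collections import deque

# Port numbers quoted in the text; the dictionary keys are illustrative labels.
ACC_PORTS = {"stateframe_read": 6341, "schedule_in": 6340, "dpp_in": 6344}


class StateframeHistory:
    """Toy 10-slot history buffer; age 0 is the frame still being filled."""

    def __init__(self, depth=10):
        self._frames = deque(maxlen=depth)
        self._frames.appendleft({})  # incomplete frame for the current second

    def update(self, **fields):
        """Add subsystem information to the frame for the current second."""
        self._frames[0].update(fields)

    def tick(self):
        """Start a new second; the oldest frame drops off once the buffer is full."""
        self._frames.appendleft({})

    def get(self, age):
        """Return the frame that is `age` seconds old (0 = current, incomplete)."""
        if not 0 <= age < len(self._frames):
            raise IndexError(f"no stateframe of age {age}")
        return self._frames[age]


history = StateframeHistory()
for second in range(12):
    history.update(second=second)
    history.tick()
print(history.get(1), history.get(9))
```

A client wanting only complete data would therefore ask for age 1 or older, since age 0 is still being filled.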

helios.solar.pvt

This machine has several functions. It is the computer that runs the schedule (for definiteness, only one schedule is allowed to run at a time), and as such it must also control the ROACHes at several time cadences: it initializes them on startup, sends information about the frequency sequence once per scan, and sends integer delays once per second. It also writes the scan_header data file to the ACC, which is read by the DPP at the start of each scan, and it creates and sends schedule information, including the exact delays, to the ACC for inclusion in the stateframe. The schedule is meant to run the same general sequence of commands every day automatically, so that unless some special configuration of the system is needed (such as for non-regular calibrations or system tests) it will continue to run for multiple days without intervention. It also must support all scheduling of calibration observations, but at least for now this is expected to be done semi-manually. The schedule may also eventually play a role in the monitor RDBMS, but this has not been fully defined as yet.

Another important function of helios is to run the fault system supervisory program. As of this writing, the fault system has not been implemented, but when functional it will examine the contents of the stateframe and create a parallel array of flags indicating problems, which various systems can examine and decide whether to alert the operator or take some corrective action.

Finally, helios also runs a version of the operator display (called sf_display, for stateframe display). Additional copies can be run as desired on other machines. It currently works fine on external machines running either Linux or Windows, when they are properly set up for tunneling through ovsa.njit.edu.

It is anticipated that helios will have only a single user account, called sched.

dpp.solar.pvt

As the name implies, this is the Data Packaging Processor (DPP), which receives the raw 10-GbE UDP data packets from the ROACHes, processes them, and outputs the “interim database” Miriad files. It has a total of 4 TB of hard disk, which is sufficient for about 1 month of interim data under full EOVSA operation. If the interim data are to be kept, they will have to be transferred to the pipeline machine, and eventually to NJIT. The DPP sends its subsystem information to the ACC, which adds it to the stateframe. The DPP also reads the stateframe as well as the scan_header file and various calibration files residing on the ACC, in order to do the correct processing of the data into Miriad files. Once the interim data have been produced (each file containing roughly 2 minutes of data), control should pass to the Pipeline machine (pipeline.solar.pvt) for further processing into archival databases as well as real-time data products. It will be necessary to use nfs to mount the dpp.solar.pvt disks on pipeline.solar.pvt. We should avoid nfs-mounting pipeline disks on dpp, however, so that data-taking is not compromised when pipeline is down for some reason.

It is anticipated that dpp will have only a single user account, called user.

sqlserver.solar.pvt

This is the RDBMS computer responsible for recording and serving the monitor database (scan header and stateframe information). Its programming allows it to adapt seamlessly to multiple versions of the stateframe. Explicit methods of accessing, storing and querying data from Python are documented in Python_Access_to_Database.pdf. The main purpose of the database is to provide historical information about the state of the system for engineering purposes, and tracking down problems in the system. It is anticipated that certain summary web pages will be created that perform standard queries to provide an overview of the state of the system.

pipeline.solar.pvt

This is the Pipeline computer responsible for real-time processing of the interim data, which has several purposes: (1) To provide a continuous indication of the quality of the data and the state of solar activity by permitting display of light-curves, spectra, and images. These data-quality indicators should be put directly onto the web for public access, as well as for use as an operator console. (2) To generate the metadata and near-real-time data products, including ultimately coronal magnetic field maps and other parameters. These data products will go into a searchable database accessible via Dominic Zarro’s system. (3) To process certain daily calibration observations in an automatic way so that the results are available to the DPP for applying to the next scan’s interim data. (4) To process and/or reprocess the interim database into final archival databases, which will form the standard uv data to be used by scientists to create and analyze their own (non-real-time) data products. (5) In the case that the archival database calibration is somehow better than that available for the real-time processing, off-line reprocessing and recreation of the real-time data products will be done for archival purposes.

The 32 TB of RAID disk storage that are available on pipeline.solar.pvt should provide enough space for at least a year of interim data, and several years of archival data, depending on the as yet unknown data volume of the metadata and data products. A second copy of the archival data will be kept at NJIT, and possibly other sites.

Pipeline currently has only one user account, called user. It may have additional accounts for a few individuals who are responsible for developing pipeline’s software, such as Jim McTiernan and Stephen White.

tawa.solar.pvt

This is a general-purpose analysis machine, meant to provide for analysis capability that is off the critical data path. It should have access to pipeline’s disks via nfs, and should be capable of doing all of the Pipeline analysis as well as other tasks.

Tawa, as a general-purpose machine, can have multiple user accounts. It may ultimately have a general guest account so that outside users can process limited jobs locally without having the full suite of software on their own computer.

Interdependencies of the EOVSA Computers

In order for the various computers in the system to do their jobs effectively, certain interdependencies are built into the infrastructure. It is important to clarify these and to keep them as limited as possible, so that the system can function when non-critical infrastructure is down for maintenance or repair.

The table in Figure 2 summarizes the interdependencies of the computers in the EOVSA system. The critical computers for control of the system are acc, helios and dpp. Basic control and data-taking are not possible without these three machines being operational. Still important, but not critical, computers (i.e. not single-point failures) are the cRIOs in the antennas, the antenna controllers themselves, and the ROACH boards. In general, the system should be capable of operating without one or more of these systems. Note that losing a ROACH board means that the two antennas input to that board are lost, and additionally all frequency channels (1/8th of each 500-MHz band) handled by that board’s X-engine are also lost. In terms of impact, then, an individual ROACH board is more critical than an individual cRIO or antenna controller. Next in importance is pipeline, which has a role not only in real-time data products and interim-to-archival processing, but also in certain near-real-time calibration processing. Although the interim database can be created without pipeline, the quality of the interim database may be compromised. Finally, tawa is off the critical path and should not generally affect any aspect of data-taking or data-processing. The gateway/web server, ovsa, could be critical in providing access to the private network from outside, although in case of ovsa failure tawa could be pressed into service by merely plugging it into the WAN and possibly making some changes in the DHCP/firewall settings at the site. It may be worthwhile to put into place preparations (and advance testing) to make this option as quick and easy as possible.
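The criticality rules in this paragraph can be summarized in a short sketch. The names CRITICAL, can_take_data and roach_loss are hypothetical and the model is deliberately simplified: it encodes only that acc, helios and dpp are single points of failure, and that each of the eight ROACH boards carries two antennas and one eighth of the frequency channels.

```python
from fractions import Fraction

CRITICAL = {"acc", "helios", "dpp"}  # single-point failures for data-taking
N_ROACH = 8                          # ROACH2 boards in the correlator
ANTENNAS_PER_ROACH = 2               # antennas input to each board


def can_take_data(down):
    """True if basic control and data-taking survive the given outages."""
    return not (CRITICAL & set(down))


def roach_loss(n_lost):
    """Antennas lost and fraction of frequency channels lost with n_lost boards down."""
    return ANTENNAS_PER_ROACH * n_lost, Fraction(n_lost, N_ROACH)


print(can_take_data({"tawa", "pipeline"}))
print(roach_loss(1))
```

Losing a single board thus removes two antennas from every channel and one eighth of the channels from every antenna, which is why it outweighs the loss of a single cRIO or antenna controller.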

Certain disks should be accessible via nfs from multiple machines, but care should be taken with nfs to avoid loss of a non-critical machine causing problems with a critical one. Therefore, unless otherwise required, the cross-mounting should be limited to pipeline having r/o access to dpp, and tawa having r/o access to dpp and pipeline.
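The cross-mounting rule can be checked mechanically. In the sketch below, the NFS_MOUNTS table mirrors the recommended layout and risky_mounts is a hypothetical helper that flags any mount where a critical machine depends on a non-critical server; neither is part of the actual site configuration.

```python
CRITICAL = {"acc", "helios", "dpp"}

# client -> servers whose disks it mounts read-only (recommended layout)
NFS_MOUNTS = {
    "pipeline": {"dpp"},
    "tawa": {"dpp", "pipeline"},
}


def risky_mounts(mounts, critical):
    """Return (client, server) pairs where a critical client mounts a non-critical server."""
    return sorted(
        (client, server)
        for client, servers in mounts.items()
        for server in servers
        if client in critical and server not in critical
    )


print(risky_mounts(NFS_MOUNTS, CRITICAL))
```

An empty result means no critical machine can hang on a mount from a non-critical one. Adding a mount of pipeline disks on dpp, for example, would be reported.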